Past Projects

Image Reconstruction for ngEHT

The next-generation Event Horizon Telescope (ngEHT) is a significant upgrade to the original EHT project; it aims to construct images and movies of black holes

using VLBI with an expanded array of telescopes. I pursued several projects for the

ngEHT Analysis Challenges, an effort to encourage the development of novel imaging

algorithms trained on synthetic datasets. These datasets are intended to simulate

realistic ngEHT observing conditions, including error sources such as thermal

noise, antenna pointing offsets, and atmospheric turbulence. For the challenges, I

provided image reconstructions of total intensity and polarized images for multiple

ngEHT observing frequencies using the standard eht-imaging package. You can see one

of my total intensity image reconstructions in

this ngEHT special issue of Galaxies.

On a personal level, it was a

Testing a Flexible Disk Emission Model

The morphology and kinematics of a protoplanetary disk are fundamental properties to constrain in characterizing

the dynamics of a disk, and thus its formation. Current spectral line emission models are not optimized for quickly

obtaining these physical parameters from an observation. An emission model in preparation by Andrews et al. aims to

quantify the morphology and kinematics of an observation using statistical inference and Bayesian techniques.

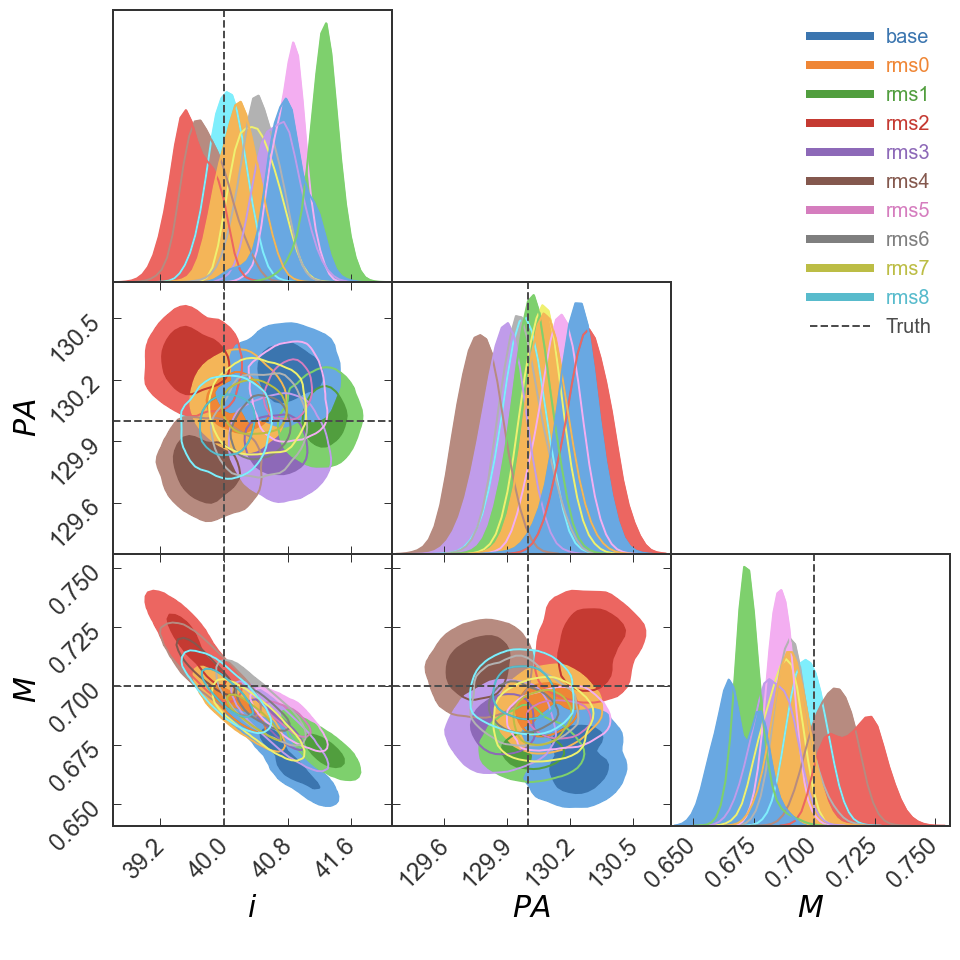

Using simulated ALMA observations, I ran MCMC retrieval tests on datasets that varied in stellar mass, noise, and spectral

and spatial resolution to fit for parameters like the inclination and brightness temperature of a disk. In collaboration with

Dr. Feng Long, I modeled the physical properties of the circumbinary disk around the binary star V892 Tau, and determined values

for the stellar mass, inclination, and position angle of the disk. You can see the results of our analysis of V892 Tau here.
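
As a rough illustration of what a retrieval test involves, the sketch below generates noisy synthetic data from a known inclination and checks whether an MCMC fit recovers it. This is not the Andrews et al. emission model: it assumes a toy Keplerian line-of-sight velocity curve, a fixed stellar mass, and a plain Metropolis sampler. All function names, parameter values, and constants here are hypothetical choices made for the example.

```python
import math
import random

G = 6.674e-11      # gravitational constant [m^3 kg^-1 s^-2]
M_SUN = 1.989e30   # solar mass [kg]
AU = 1.496e11      # astronomical unit [m]


def v_los(r_au, m_star_msun, incl_deg):
    """Toy model: line-of-sight Keplerian speed [km/s] on the disk major axis."""
    v_kep = math.sqrt(G * m_star_msun * M_SUN / (r_au * AU))  # [m/s]
    return v_kep * math.sin(math.radians(incl_deg)) / 1e3


def log_posterior(incl_deg, radii, data, sigma, m_star_msun):
    """Gaussian log-likelihood with a flat prior on inclination in (0, 90) deg."""
    if not 0.0 < incl_deg < 90.0:
        return -math.inf
    chi2 = sum(((d - v_los(r, m_star_msun, incl_deg)) / sigma) ** 2
               for r, d in zip(radii, data))
    return -0.5 * chi2


def run_retrieval(true_incl=35.0, m_star_msun=1.0, sigma=0.1,
                  n_steps=6000, n_burn=1000, step=1.0, seed=0):
    """Simulate data at a known inclination, then try to recover it."""
    rng = random.Random(seed)
    radii = [20.0 + 10.0 * k for k in range(20)]  # sample radii [au]
    data = [v_los(r, m_star_msun, true_incl) + rng.gauss(0.0, sigma)
            for r in radii]

    current = 60.0  # deliberately poor starting guess [deg]
    current_lp = log_posterior(current, radii, data, sigma, m_star_msun)
    chain = []
    for _ in range(n_steps):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_posterior(proposal, radii, data, sigma, m_star_msun)
        # Metropolis rule: accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, proposal_lp - current_lp)):
            current, current_lp = proposal, proposal_lp
        chain.append(current)

    samples = chain[n_burn:]  # discard burn-in
    mean = sum(samples) / len(samples)
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))
    return mean, std


if __name__ == "__main__":
    mean, std = run_retrieval()
    print(f"recovered inclination: {mean:.2f} +/- {std:.2f} deg (truth: 35.0)")
```

Repeating this with different noise levels or radial sampling gives a feel for how data quality affects the recovered parameter, which is the basic idea behind varying the datasets in a retrieval test.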

CERN and Fermilab

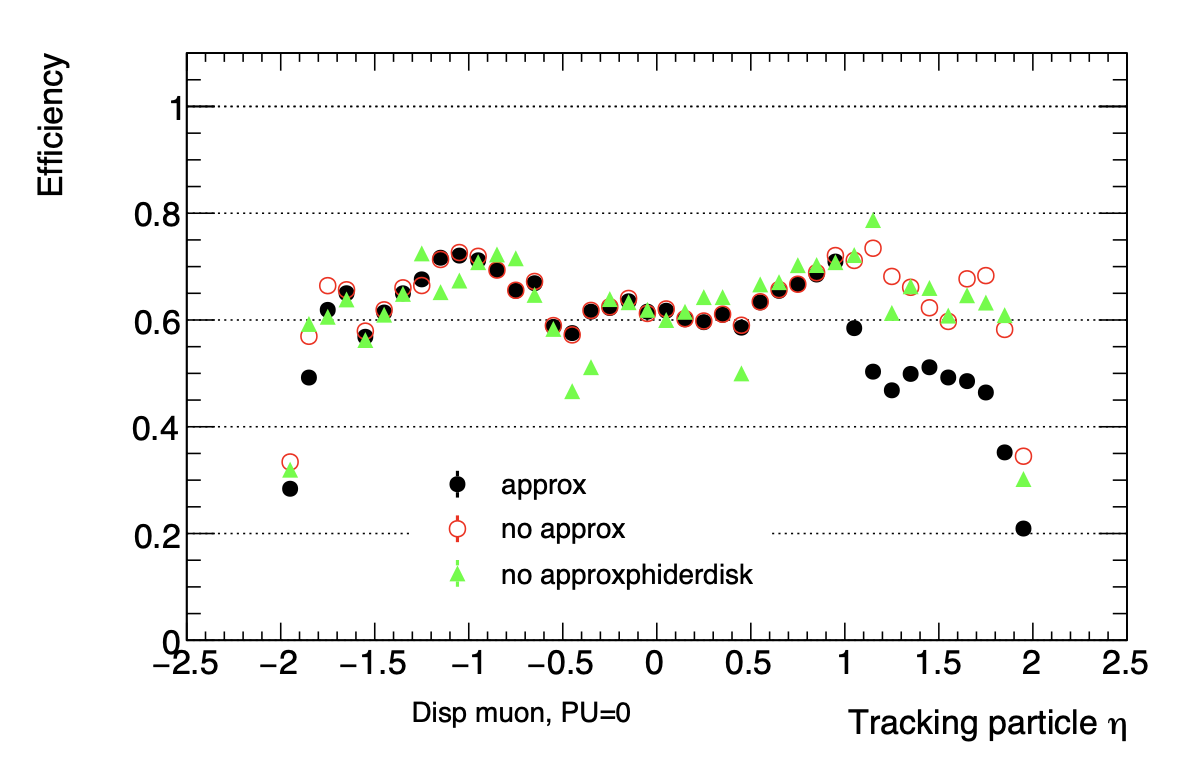

My past research in experimental high-energy physics falls under the realm of software development and testing.

At Northeastern, I worked on the track-trigger system of the CMS experiment at CERN.

My work investigated a known issue in the experiment's particle trajectory-finding software

that arose when enabling a setting that approximated all mathematical calculations in the software.

This setting simulated how the tracking software would perform on hardware components, which is how the tracking will be implemented

in the future upgrade to the High-Luminosity LHC. What was the cause of the issue? About one line of code out of thousands. How long

did it take to find it? About three months. That one fix (along with some others) improved the efficiency of the track-finding software

in reconstructing particle trajectories in simulated collisions.

As a part of the DOE SULI Program, I developed a data reduction and analysis software

pipeline for the REDTOP experiment at Fermilab.

The REDTOP experiment is a proposed detector experiment at Fermilab that aims to study fundamental symmetry violations in physics by

capturing and analyzing the decay products of the η meson. My work involved reducing and analyzing data collected from test beams to

understand the performance of the photomultipliers in the novel dual-readout calorimeter.